A couple of weeks ago, me and my Neo4j Breakfast Club friends were just freewheeling our way into the day, and one of my colleagues started talking to me about Conway's Game of Life.. I had never heard of this thing, but was immediately fascinated. It basically allows you to simulate evolution in a rudimentary and simplified kind of way, but it's really fascinating how it works based on a very simple set of rules (see below). There's an entire Wiki dedicated just to this "game" - it's one of the most wonderful rabbitholes on the web that I have ever seen. Just take a look at this example and you will see the idea in action:

The Game of Life, also known simply as Life, is a cellular automaton devised by the British mathematician John Horton Conway in 1970. It is a zero-player game, meaning that its evolution is determined by its initial state, requiring no further input. One interacts with the Game of Life by creating an initial configuration and observing how it evolves. It is Turing complete and can simulate a universal constructor or any other Turing machine.

So when I heard about it, I immediately thought that it would be a ton of fun to run this experiment in

Neo4j. Why? Because the rules are all about connections between members of a population. Things will evolve - or not - based on their connectivity.

The rules of the Game

The whole idea of the Game is that you will create some kind of a "population" of cells in a matrix of cells. Every cell will have a maximum of 8 neighbours, and will be evolving it's state (either Dead or Live) with every iteration.

So at every "turn", the game will evaluate what will happen to every cell based on a very simple set of rules:

- Any live cell with fewer than two live neighbours dies, as if by underpopulation.

- Any live cell with two or three live neighbours lives on to the next generation.

- Any live cell with more than three live neighbours dies, as if by overpopulation.

- Any dead cell with exactly three live neighbours becomes a live cell, as if by reproduction.

So then you can actually see what would happen to a population and simulate it's evolution. I figured how hard could it be to emulate this in a graph, as clearly the connectivity between cells and their neighbours would lend itself to some serious graphiness.

So I took this idea for a spin.

Simulating the Game of Life in Neo4j

First we start by setting up that database.

Setup the database and the indexes

:use system;

create or replace database neo4j;

:use neo4j;

create index for (c:Cell) on c.x;

create index for (c:Cell) on c.y;

Then we can create the "field" that we will be playing the game in.

Create the 25x25 matrix and connect the cells

We can do this in one query, which included two steps:

- we first create the cells

- we connect the cells using the

NEIGHBOUR_OF relationship

UNWIND range(1,25) as x

UNWIND range (1,25) as y

CREATE (c:Cell:Dead {x: x, y: y})

WITH c

MATCH (c2:Cell)

WHERE c2.x-1<=c.x<=c2.x+1

AND c2.y-1<=c.y<=c2.y+1

AND id(c)<id(c2)

MERGE (c)-[:NEIGHBOUR_OF]->(c2);

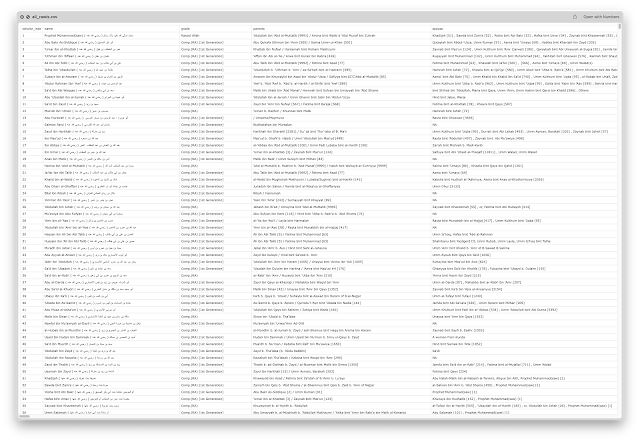

The graph then looks like this:

Now we are ready to start playing the game.

Seeding the graph with live cells

In the Game of Life, there's always a need to introduce the starting state of the field. We call this the seeding of the game, and there are obviously lots of ways that we could do that. Here, I will start by setting approximately 20% of the cells/nodes to Live, randomly throughout the field.

:auto UNWIND range(1,200) as range

CALL {

WITH range

MATCH (c:Cell)

WHERE

c.x = round(rand()*25) AND

c.y = round(rand()*25)

SET c:Live

REMOVE c:Dead

} in transactions of 10 rows;

You can now see the difference

before and

after by running a simple query:

match p = ()-[r:NEIGHBOUR_OF]->() return p;

As you can see from the screenshot, the "

Live" nodes are a bit spread out in the field right now. This is actually a bit of an issue for the game - as it will immediately eliminate a huge part of the population upon the first iteration of executing the rules. Which in an of itself is interesting, but leads to very unpredictable behaviour in the rounds of the game. That's why my friend

https://twitter.com/mesirii handed me the suggestion of starting out with a different type of seeding for the field.

Alternative seeding strategy

Michael suggested that we would use an (https://conwaylife.com/wiki/R-pentomino)[R-Pentomino], the smallest 5 element starting point.

First we need to reset the field to all dead - all Cells need to have the Dead Label.

match (n:Cell)

remove n:Live

set n:Dead;

Then we create the R-Pentomino using this query:

UNWIND [[1,0],[2,0],[0,1],[1,1],[1,2]] as pento

MATCH (c:Cell {x: 5+pento[0], y:5+pento[1]})

SET c:Live

REMOVE c:Dead;

This then makes the field look very different:

With this setup, we can now start playing the game for real.

Actually playing the Game of Life

Now that we have the field in its starting state, we are going to start iterating from there on using the rules. This turned out to be a little more complicated than I thought, probably a bit above my paygrade, and hence I really have to thank https://twitter.com/mesirii again for his generous help with the iteration query below.

To start, let's look at current graph composition, by understanding how many Dead or Live cells there are currently in the system. We do that with a very simple query:

match (n)

return labels(n), count(n);

So let's start iterating by applying the

rules with a Cypher query that we will run time and again.

Iterate using the rules

The query below allows you to run iterations of the rules. Here's how it works:

- we first match for all the

Cell nodes

- then we evaluate a

Case to evaluate if a particular Cell node should stay "alive" or not. There are basically three cases:

- when a cell has 2 connections to a

:Live node, the state after the iteration will depend on whether or not the cell is :Live itself. If it is :Live, then the cell will stay alive. If it is not, then it will turn dead.

- when a cell has 3 connections, then the state after the iteration will be

:Live.

- when a cell has any other number of connections (less than 2, more than 3), then the cell will turn dead.

- once we have that outcome, we will have two subsequent subqueries in a

call statement, that will add and/or remove the :Live or :Dead labels.

It looks like this in Cypher:

match (c:Cell)

with c,

case size((c)-[:NEIGHBOUR_OF]-(:Live))

when 2 then c:Live

when 3 then true

else false

end as alive

call { with c, alive

WITH *

WHERE alive

SET c:Live

REMOVE c:Dead

}

call { with c, alive

WITH *

WHERE not alive

SET c:Dead

REMOVE c:Live

}

return labels(c), count(c);

Now, in order to make it easy to see how the game actually works, it's easier to create a Bloom perspective to look at the result - as Bloom will allow you to see the evolution of the graph without having to completely reload the entire visualisation. In the perspective, I have setup 2 search phrases:

- one to "Show the graph"

- one to run the above Cypher query that would iterate over the field and allow us to see the result

So now, when you run the

iterate search phrase time and time again, it will allow you to see the animation like this:

That brings me to the end of my fun experiment with our friend

John Horton Conway. It has been a fascinating journey for sure - and I am sure that there are many other things that we could do to make it even more interesting to run this simulation in Neo4j.

Hope this was as fun for you as it was for me. As always

All the best

Rik