Every now and again, my graph-nerves get itchy. It feels like I need to get my hands dirty again and play around with the latest and greatest version of Neo4j. Now that I have a somewhat bigger team in Europe working on making Neo4j the best thing since sliced bread, it is becoming more and more difficult to find the time to do that – but every now and again I just “get down on it” and take it for another spin.

So recently I was thinking about how Neo4j can actually help us with some of the fraud analysis and fraud detection use cases. This one has been getting a lot of attention recently, with the coming out of the Swissleaks papers from the International Consortium of Investigative Journalists (ICIJ). Our friends at Linkurio.us did some amazing work there. And we also have some other users that are doing cool stuff with Neo4j, OpenCorporates to name just one. So I wanted to do something in that “area” and started looking for a cool dataset.

The KBO dataset

I ended up downloading a dataset from the Belgian Ministry of Economics, who run the “Crossroads Database for Corporations” (“Kruispuntbank voor Ondernemingen”, in Dutch – aka “the KBO”). Turns out that all of the Ministry’s data on corporations is publicly available. All you need to do is register, and then you can download a ZIP file with all of the publicly registered organisations out there.

The full 200MB zip file contains a set of CSV files, holding all the data that we would possibly want for this exercise. Unzipped it’s about 1GB of data, and there’s quite a lot of it as you can see from this overview:

So you can see that this is a somewhat larger dataset. About 22 million CSV lines that would need to get processed – so surely that would require some thinking … So I said “Challenge Accepted” and got going.
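If you want to check the size of such a download yourself, a line count per CSV file is a quick first step. Here is a minimal Python sketch of that idea. The function name `count_csv_lines` and the tiny in-memory archive are made up for illustration; they are not part of the KBO download.

```python
import io
import zipfile

def count_csv_lines(archive):
    """Return {member name: number of lines} for every .csv member of a ZIP archive."""
    counts = {}
    with zipfile.ZipFile(archive) as zf:
        for name in zf.namelist():
            if name.lower().endswith(".csv"):
                # Stream the member line by line so large files never sit in memory.
                with zf.open(name) as member:
                    counts[name] = sum(1 for _ in member)
    return counts

# Tiny in-memory archive standing in for the real download (contents are made up).
demo = io.BytesIO()
with zipfile.ZipFile(demo, "w") as zf:
    zf.writestr("enterprise.csv", "EnterpriseNumber,Status\n1,AC\n2,AC\n")
    zf.writestr("readme.txt", "not a csv\n")

demo.seek(0)
counts = count_csv_lines(demo)
print(counts)
```

Pointing `count_csv_lines` at the path of the real ZIP file should work the same way, since `zipfile.ZipFile` accepts both paths and file-like objects. Note that the count includes each file's header line.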

The KBO Model

The first thing I would need in any kind of import exercise would be a solid datamodel. So I thought a bit about what I wanted to do with the data afterwards, and I decided that it would be really interesting to look at two specific aspects in the data:

- The activity types of the organisations in the dataset. The dataset has a lot of data about activity categorisations – definitely something to explore.

- The addresses/locations of the organisations in the dataset. The thinking would be that I would want to understand interesting clusters of locations where lots of organisations are located.

So I created a model that could accommodate that. Here’s what I ended up with.

So as you can see, there’s quite a few entities here, and they essentially form 3 distinct but interconnected hierarchies:

- The “orange” hierarchy has all the addresses in Belgium where corporations are located.

- The “green” hierarchy has the different “Codes” used in the dataset, specifically the activity codes that use the NACE taxonomy.

- The “blue” hierarchy gives us a view of links between corporations/enterprises and establishments.
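The three hierarchies can also be written down as a small data structure. The Python sketch below uses the node labels and relationship types that appear in the import statements later in this post. The `HouseNumber` label is only mentioned in the text, so its spelling here is an assumption, and the helper names are hypothetical.

```python
# Hierarchy colours come from the post; labels and relationship types are the
# ones used in the import statements. The HouseNumber spelling is an assumption.
HIERARCHIES = {
    "orange": ["City", "Zip", "Street", "HouseNumber"],
    "green": ["CodeCategory", "Code", "CodeMeaning"],
    "blue": ["Enterprise", "Establishment"],
}

# (start label, relationship type, end label), as written in the import statements.
RELATIONSHIPS = [
    ("Code", "PART_OF", "CodeCategory"),
    ("CodeMeaning", "MEANS", "Code"),
    ("Establishment", "PART_OF", "Enterprise"),
    ("City", "HAS_ZIP_CODE", "Zip"),
]

def hierarchy_of(label):
    """Return the colour of the hierarchy a node label belongs to."""
    for colour, labels in HIERARCHIES.items():
        if label in labels:
            return colour
    raise KeyError(label)

def cross_hierarchy(relationships):
    """Return the relationships whose two ends sit in different hierarchies."""
    return [r for r in relationships if hierarchy_of(r[0]) != hierarchy_of(r[2])]

print(cross_hierarchy(RELATIONSHIPS))
```

With only the relationships loaded in this part of the series, the result is an empty list: every relationship stays inside its own hierarchy. The links that connect the hierarchies to each other are the part that still has to be added.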

So there we had it. A sizeable dataset and a pretty much real-world model that is somewhat complicated. Now we could start thinking about the Import operations themselves.

Preparing Neo4j for the Import

Now, I have done some interesting “import” jobs before. I know that I need to take care when writing to a graph, as we are effectively doing write operations that have a lot more intricate work going on – we are writing data AND structure at the same time. So that means that we need to have some specific settings adjusted in Neo4j that would really work in our favour. Here’s a couple of things that I tweaked:

- In neo4j.properties, I adjusted the cache settings. Caching typically just introduces overhead, and when you are writing to the graph these caches really don’t help at all. So I added cache_type=weak to the configuration file.

- In neo4j-wrapper.conf, I adjusted the Java heap settings. While Neo4j is making great strides towards making memory management less dependent on the Java heap, today you should still assign a large enough heap for import operations. My machine only has 8GB of RAM, so I had to leave it at a low-ish 4GB. The way to force that heap size is to make the initial memory assignment equal to the maximum memory assignment by adding two lines:

wrapper.java.initmemory=4096

wrapper.java.maxmemory=4096

to the configuration file (both values are in MB).

That’s the preparatory work done. Now onto the actual import job.

Importing the data using Cypher’s Load CSV

The default tool for loading CSV files into Neo4j is of course Cypher’s “LOAD CSV” command, so that is what I used at first. I looked at the model, looked at the CSV files, and wrote the following Cypher statements to load the “green” part of the datamodel first – the code hierarchy. Here’s what I did:

create index on :CodeCategory(name);

using periodic commit 1000

load csv with headers from

"file:/…/code.csv" as csv

with distinct csv.Category as Category

merge (:CodeCategory {name: Category});

Note that the “with distinct” clause is really just there to make the “merge” operation easier, as we will be ensuring uniqueness before doing the merge. The “periodic commit” allows us to batch update operations together for increased throughput.
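To make the two ideas in that statement concrete, here is a loose Python analogy, not Neo4j code: values are de-duplicated before the idempotent "merge", and the writes are grouped into batches. The sample rows and the helper names (`distinct_categories`, `batched`, `merge_categories`) are made up for illustration; only the column names come from the Cypher above.

```python
import csv
import io

# Hypothetical sample standing in for code.csv; the values are invented.
SAMPLE = """Category,Code,Language,Description
CategoryA,01,NL,first
CategoryA,01,FR,premier
CategoryA,02,NL,second
CategoryB,014,NL,third
"""

def distinct_categories(rows):
    """Yield each Category value once, in first-seen order (like WITH DISTINCT)."""
    seen = set()
    for row in rows:
        category = row["Category"]
        if category not in seen:
            seen.add(category)
            yield category

def batched(items, size):
    """Group items into lists of at most `size` (roughly like PERIODIC COMMIT)."""
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch

def merge_categories(store, text, batch_size=1000):
    """Add every distinct category to `store` once; return the number of batches."""
    rows = csv.DictReader(io.StringIO(text))
    commits = 0
    for batch in batched(distinct_categories(rows), batch_size):
        for name in batch:
            # setdefault only creates the entry when it is missing, like MERGE.
            store.setdefault(name, {"label": "CodeCategory", "name": name})
        commits += 1
    return commits

store = {}
commits = merge_categories(store, SAMPLE, batch_size=1)
print(sorted(store), commits)
```

Running `merge_categories` a second time on the same `store` leaves it unchanged, which is the property that makes a merge-style load safe to repeat.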

So then we could continue with the rest of the code.csv file. Note that I am trying to make the import operations as simple as possible on every run – rather than trying to do everything in one go. This is just to make sure that we don’t run out of memory during the operation.

create index on :Code(name);

using periodic commit

load csv with headers from

"file:/…/code.csv" as csv

with distinct csv.Code as Code

merge (c:Code {name: Code});

using periodic commit

load csv with headers from

"file:/…/code.csv" as csv

with distinct csv.Category as Category, csv.Code as Code

match (cc:CodeCategory {name: Category}), (c:Code {name: Code})

merge (cc)<-[:PART_OF]-(c);

create index on :CodeMeaning(description);

using periodic commit

load csv with headers from

"file:/…/code.csv" as csv

merge (cm:CodeMeaning {language: csv.Language, description: csv.Description});

using periodic commit

load csv with headers from

"file:/…/code.csv" as csv

match (cc:CodeCategory {name: csv.Category})<-[:PART_OF]-(c:Code {name: csv.Code}), (cm:CodeMeaning {language: csv.Language, description: csv.Description})

merge (c)<-[:MEANS]-(cm);

As you can see from the screenshot below, this takes a while.

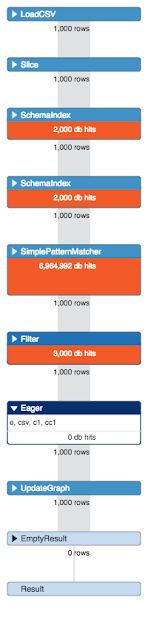

If we profile the query that is taking the time, then we see that it’s probably related to the CodeMeaning query – where we add Code-meanings to the bottom of the hierarchy. We see the “evil Eager” pipe come in, where we basically know that Cypher’s natural laziness is being overridden by a transactional integrity concern. It basically needs to pull everything into memory, taking a long time to do – even on this small data-file.
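The difference between lazy and eager execution can be illustrated outside of Neo4j. The Python sketch below is only an analogy and says nothing about Cypher's internals: the lazy version writes each row as soon as it has been read, while the eager version reads everything into memory before the first write. All function names are hypothetical.

```python
def read_rows(n, log):
    """Simulate a CSV reader that hands out one row at a time."""
    for i in range(n):
        log.append(("read", i))
        yield i

def write_row(row, log):
    """Simulate the write that follows the read."""
    log.append(("write", row))

def lazy_pipeline(n):
    """Each row is written as soon as it is read; one row in flight at a time."""
    log = []
    for row in read_rows(n, log):
        write_row(row, log)
    return log

def eager_pipeline(n):
    """All rows are read into memory before the first write happens."""
    log = []
    rows = list(read_rows(n, log))  # the "eager" step: materialise everything
    for row in rows:
        write_row(row, log)
    return log

print(lazy_pipeline(3))
print(eager_pipeline(3))
```

In the lazy log, reads and writes alternate. In the eager log, all reads come first, so memory use grows with the size of the input rather than staying constant.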

This obviously already caused me some concerns. But I continued to add the enterprises and establishments to the database in pretty much the same manner:

//load the enterprises

create constraint on (e:Enterprise)

assert e.EnterpriseNumber is unique;

using periodic commit 5000

load csv with headers from

"file:/…/enterprise.csv" as csv

create (e:Enterprise {EnterpriseNumber: csv.EnterpriseNumber, Status: csv.Status, JuridicalSituation: csv.JuridicalSituation, TypeOfEnterprise: csv.TypeOfEnterprise, JuridicalForm: csv.JuridicalForm, StartDate: toInt(substring(csv.StartDate,0,2)) + toInt(substring(csv.StartDate,3,2))*100 + toInt(substring(csv.StartDate,6,4))*10000});

Note that I used a nice trick (described in this blogpost) to convert the date information in the csv file to a number.
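The arithmetic behind that conversion is easier to follow in isolation. The Python sketch below mirrors the three `substring` calls, assuming the date text is laid out as two digits for the day, a one-character separator, two digits for the month, another separator, and four digits for the year. The separator character and the sample date are assumptions; the post does not show the raw values.

```python
def date_to_int(text):
    """Convert 'dd?mm?yyyy' (any single-character separator) to the integer yyyymmdd."""
    day = int(text[0:2])     # substring(csv.StartDate, 0, 2)
    month = int(text[3:5])   # substring(csv.StartDate, 3, 2)
    year = int(text[6:10])   # substring(csv.StartDate, 6, 4)
    return day + month * 100 + year * 10000

print(date_to_int("09-08-1960"))  # 19600809
```

Because the year ends up in the most significant digits, the resulting integers sort in chronological order, which makes range comparisons on `StartDate` straightforward.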

//load the establishments

create constraint on (eb:Establishment)

assert eb.EstablishmentNumber is unique;

using periodic commit

load csv with headers from

"file:/…/establishment.csv" as csv

create (es:Establishment {EstablishmentNumber: csv.EstablishmentNumber, StartDate: toInt(substring(csv.StartDate,0,2)) + toInt(substring(csv.StartDate,3,2))*100 + toInt(substring(csv.StartDate,6,4))*10000});

using periodic commit

load csv with headers from

"file:/…/establishment.csv" as csv

match (e:Enterprise {EnterpriseNumber: csv.EnterpriseNumber}), (es:Establishment {EstablishmentNumber: csv.EstablishmentNumber})

create (es)-[:PART_OF]->(e);

Interestingly, all of this is really fast, especially compared to what was happening above with the Code-meanings. The reason for this is of course that we are doing much simpler operations here while adding the data. The entire execution time on my little laptop was 7 minutes and 10 seconds to add 3180356 nodes and 1602575 relationships. Not bad at all.
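Taking the figures quoted above at face value, the throughput is easy to work out. This small Python calculation only restates those numbers; it is not a benchmark.

```python
nodes = 3180356
relationships = 1602575
seconds = 7 * 60 + 10  # 7 minutes and 10 seconds

total = nodes + relationships
per_second = round(total / seconds)

print(total, seconds, per_second)  # 4782931 430 11123
```

That works out to roughly 11,000 nodes and relationships written per second on an 8GB laptop.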

At this point the model looks like this:

Then we start working with the address data, and start adding this to the database. It works very well for the Cities and Zipcodes:

create constraint on (c:City)

assert c.name is unique;

create constraint on (z:Zip)

assert z.name is unique;

create index on :Street(name);

//adding the cities

using periodic commit 100000

load csv with headers from

"file:/…/address.csv" as csv

with distinct toUpper(csv.MunicipalityNL) as MunicipalityNL

merge (city:City {name: MunicipalityNL});

//adding the zip-codes

using periodic commit 100000

load csv with headers from

"file:/…/address.csv" as csv

with distinct toUpper(csv.Zipcode) as Zipcode

merge (zip:Zip {name: Zipcode});

// connect the zips to the cities

using periodic commit 100000

load csv with headers from

"file:/…/address.csv" as csv

with distinct toUpper(csv.Zipcode) as Zipcode, toUpper(csv.MunicipalityNL) as MunicipalityNL

match (city:City {name: MunicipalityNL}), (zip:Zip {name: Zipcode})

create unique (city)-[:HAS_ZIP_CODE]->(zip);

Once we had this, we wanted to add the streets to the Zip – not to the City, because the same street name can occur in different cities. And this is a problem:

It takes a very long time. The plan looks like this:

And unfortunately, it gets worse for adding the HouseNumbers to every street. It still works – but it’s painfully slow.
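The reason for attaching streets to the Zip rather than looking them up by name alone can be shown with a few rows. The Python sketch below uses invented address rows and hypothetical helper names. Upper-casing the street name is also an assumption, borrowed from the `toUpper` calls used for cities and zip codes above.

```python
# Hypothetical address rows: (zip code, street name). Not taken from the KBO files.
ROWS = [
    ("1000", "Kerkstraat"),
    ("2000", "Kerkstraat"),
    ("1000", "kerkstraat"),
    ("2000", "Stationsstraat"),
]

def streets_by_name(rows):
    """Identify a street by its name only: equal names in different zips collapse."""
    return {street.upper() for _, street in rows}

def streets_by_zip(rows):
    """Identify a street by (zip, name): equal names in different zips stay apart."""
    return {(zipcode.upper(), street.upper()) for zipcode, street in rows}

print(len(streets_by_name(ROWS)))  # 2
print(len(streets_by_zip(ROWS)))   # 3
```

Keying on the name alone would merge the two different streets into one, which is why the street has to be matched together with its Zip. That combined match is also more work per row than a simple lookup on a single property.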

I tried a number of different things, called in the help of a friend, and finally got it to work by replacing “Merge” with “Create Unique”. That operation does a lot less checking on the total pattern that you are adding, and can therefore be more efficient. So oof. That worked.

Unfortunately we aren’t done yet. We still need to connect the Enterprises and Establishments to their addresses. And that’s where the proverbial sh*t hit the air rotating device, and things stopped working. So we needed to address that, and that’s why there will be a part 2 to this blogpost very soon explaining what happened.

All of the material mentioned in this blog series is on github if you want to take a look at it.

Hope you already found this useful. As always, comments are very welcome.

Cheers

Rik