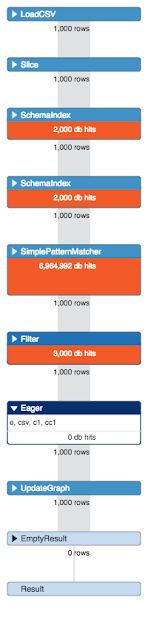

The thing is that, after splitting the files into smaller chunks and iterating over them with Python, I still was not getting the performance I needed. Why oh why is that? I looked at the profile of one of the problematic load scripts, and saw this:

I checked all of my setup multiple times, read and re-read Michael Hunger's fantastic Load CSV summary, and still was hitting problems that I should not be hitting. This is where I started looking at the query plan in more detail, and spotted the "Problem with Eager". I remembered reading one of Mark Needham's blogposts about "avoiding the Eager", and not fully understanding it as usual - but realizing that this must be what is causing the trouble. Let's drill into this a little more.

Trying to understand the "Eager Operation"

I had read about this before, but did not really understand it until Andres explained it to me again: in all normal operations, Cypher loads data lazily. See for example this page in the manual - it basically just loads as little as possible into memory when doing an operation. This laziness is usually a really good thing. But it can get you into a lot of trouble as well - as Michael explained it to me: "Cypher tries to honor the contract that the different operations within a statement are not affecting each other. Otherwise you might end up with non-deterministic behavior or endless loops. Imagine a statement like this:

MATCH (n:Foo) WHERE n.value > 100 CREATE (m:Foo {value: n.value + 100});

If the two clauses were not isolated, then each node the CREATE generates would cause the MATCH to match again, and so on: an endless loop. That's why in such cases, Cypher eagerly runs all MATCH statements to exhaustion so that all the intermediate results are accumulated and kept (in memory).

Usually with most operations that's not an issue as we mostly match only a few hundred thousand elements max. With data imports using LOAD CSV, however, this operation will pull in ALL the rows of the CSV (which might be millions), execute all operations eagerly (which might be millions of creates/merges/matches) and also keep the intermediate results in memory to feed the next operations in line. This also effectively disables PERIODIC COMMIT, because by the time we get to the end of the statement execution all create operations will already have happened and the gigantic tx-state has accumulated."
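A small Python analogy may make this concrete. This is only a sketch of the idea, not Cypher and not how Neo4j is implemented: the list `nodes` stands in for the `:Foo` nodes, and the function names and the `cap` parameter are made up for illustration. A lazy "match" that reads the live list keeps seeing the items the "create" step appends, so it only stops because of the cap; the eager version first collects all matches in memory and then creates.

```python
from itertools import islice


def lazy_match(nodes, threshold):
    """Yield matching values one at a time, reading the live list (lazy)."""
    for value in nodes:  # a list iterator also sees items appended while iterating
        if value > threshold:
            yield value


def run_lazy(nodes, threshold, cap):
    """Lazy match feeding create: it only stops because of the safety cap."""
    created = 0
    for value in islice(lazy_match(nodes, threshold), cap):
        nodes.append(value + 100)  # the new item is visible to the running match
        created += 1
    return created


def run_eager(nodes, threshold):
    """Eager match: materialise every match first, then run the creates."""
    matches = list(lazy_match(nodes, threshold))  # the in-memory "eager" step
    for value in matches:
        nodes.append(value + 100)
    return len(matches)
```

The price of the eager version is the `matches` list: harmless for a handful of nodes, but with millions of CSV rows it is exactly the kind of accumulated intermediate result Michael describes.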

So that's what's going on in my LOAD CSV queries. MATCH/MERGE/CREATE caused an eager pipe to be added to the execution plan, and it effectively disables the batching of my operations "using periodic commit". Apparently quite a few users run into this issue even with seemingly simple LOAD CSV statements. Very often you can avoid it, but sometimes you can't.

Try something different: neo4j-shell-tools

So I was wondering if there were any other ways to avoid eager, or if there would be any way for the individual Cypher statement to "touch" less of the graph. That's when I thought back to a couple of years ago, when we did not yet have an easy and convenient tool like LOAD CSV. In those early days of import (it's actually hard to believe that this is just a few years back - man have we made a lot of progress since that time!!!) we used completely different tools. One of those tools was basically a plugin for the neo4j-shell, called neo4j-shell-tools. These tools still offer a lot of functionality that is terribly useful at times - among which a Cypher-based import command, the import-cypher command. Similar to LOAD CSV, the command has a batching option that will "execute each statement individually (per csv-line) and then batch statements on the outside so they (unintentionally, because they were written long before load csv) circumvent the eager problem by only having one row of input per execution". Nice - so this could actually solve it! Exciting.
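The "one row per execution, batch on the outside" idea can be sketched in a few lines of Python. To be clear, this is not the neo4j-shell-tools implementation: `import_rows`, `execute` and `commit` are hypothetical names, and the two callables stand in for "run the statement with this row as parameters" and "commit the open transaction".

```python
import csv
import io


def import_rows(csv_text, execute, commit, batch_size):
    """Run `execute` once per CSV row and `commit` after every `batch_size` rows."""
    pending = 0
    total = 0
    for row in csv.DictReader(io.StringIO(csv_text)):
        execute(row)  # one statement execution, fed a single row of input
        pending += 1
        total += 1
        if pending == batch_size:
            commit()  # the commit happens outside the statement
            pending = 0
    if pending:
        commit()  # flush the final, partial batch
    return total
```

Because each execution only ever sees one row, an eager step inside the statement has at most that one row to hold on to, and the transaction never grows beyond `batch_size` executions.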

So then I spent about 30 mins rewriting the load csv statements as shell-tools commands. Here's an example:

//connect the Establishments to the addresses

import-cypher -i /<path>/sourcecsv/address.csv -b 10000 -d , -q with distinct toUpper({Zipcode}) as Zipcode, toUpper({StreetNL}) as StreetNL, toUpper({HouseNumber}) as HouseNumber, {EntityNumber} as EntityNumber match (e:Establishment {EstablishmentNumber: EntityNumber}), (street:Street {name: StreetNL, zip:Zipcode})<-[:PART_OF]-(h:HouseNumber {houseNumber: HouseNumber}) create (e)-[:HAS_ADDRESS]->(h);

In this command the -i indicates the source file, -b the REAL batch size of the outside commit, -d the delimiter, and finally -q the fact that the source file is quoted. Executing this in the shell was dead easy of course, and immediately also provides nice feedback of the progress:

Just a few minutes later, everything was processed.

So this allowed us to quickly and conveniently execute all of the import statements in one go. Once we had connected all the Enterprises and Establishments to the addresses, the model looked like this:

So then all that was left to do was to connect Enterprises and Establishments to the activities:

The total import time of this entire dataset, on my MacBook Air 11, was about 3 hours - without any hiccups whatsoever.

So that was a very interesting experience. Had to try lots of different approaches - but managed to get the job done.

As with the previous parts of this blog series, you can find all of the scripts etc on this gist.

In the last section of this series, we will try to summarize our lessons learnt. In any case I hope this has been a learning experience for you as well as it was for me.

Cheers

Rik
